Introduction

Data is only as valuable as its freshness. In a fast-moving business environment, relying on outdated or inaccurate data can quietly drain profits, mislead decision-makers, and erode competitive advantage. For CEOs, business analysts, and data consultants, recognizing the hidden cost of stale data is the first step toward building analytics systems that drive real impact.

When data stops reflecting current realities, every insight drawn from it becomes a liability rather than an asset. The danger lies in how silently this happens: teams continue to analyze numbers that no longer represent today’s customers, markets, or performance.

What Is Stale Data?

Stale data is information that has aged beyond its usefulness. How old that is depends on the industry and the system: in some settings weeks, months, or even years; in others, only hours. In e-commerce, for example, even a few hours of delay in updating stock levels or customer behavior can lead to lost revenue opportunities.

Common sources of stale data include:

- Outdated databases that lack auto-refresh mechanisms

- Manual data entry prone to delay and errors

- Disconnected data pipelines and legacy systems

- Infrequent synchronization between platforms

The Real Cost of Stale Data

Stale data impacts businesses in ways that are often underestimated:

1. Poor Decision-Making

Executives make strategic calls based on historical data that no longer reflects market realities, leading to misaligned goals and wasted budgets.

2. Financial Losses

Companies lose money through wrong pricing decisions, poor demand forecasts, or campaigns aimed at the wrong customer segments.

3. Damaged Customer Experience

If customer preferences have shifted and your analytics still reflect old behavior, marketing and personalization efforts will fail to engage.

4. Reduced Operational Efficiency

Teams waste time cleaning, verifying, and reconciling inconsistent datasets instead of generating insights.

5. Reputational Damage

Inaccurate reports or delayed responses to market changes can reduce stakeholder trust and credibility.

According to Gartner Data Quality Reports, poor data quality, of which stale data is one common form, costs organizations an average of $12.9 million annually, a clear sign that inaction is expensive.

How to Detect Stale Data Early

Early detection of stale data requires continuous monitoring and validation systems. Warning signs include:

- Conflicting reports across departments

- Declining accuracy in predictive models

- Recurring manual interventions to fix missing values

- Delays between data capture and analytics output

Automation tools can flag data anomalies, detect latency in pipelines, and trigger alerts for irregular update cycles.
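As a minimal sketch of that kind of alerting, assuming you can collect the timestamp of each successful load for a table, the Python below flags gaps between loads that exceed the expected refresh interval and reports whether the table is currently overdue. The function name `find_update_gaps` and the 1.5× tolerance are illustrative choices, not features of any particular monitoring tool.

```python
from datetime import datetime, timedelta


def find_update_gaps(update_times, expected_interval, tolerance=1.5, now=None):
    """Flag irregular update cycles for one dataset.

    update_times: timestamps of successful loads (any order).
    expected_interval: how often the dataset is supposed to refresh.
    tolerance: multiplier on expected_interval before a gap counts as late.

    Returns (gaps, is_overdue): gaps lists (previous, next) load pairs spaced
    further apart than the limit; is_overdue says whether the most recent
    load is already older than the limit.
    """
    if not update_times:
        raise ValueError("no update timestamps supplied")
    limit = expected_interval * tolerance
    times = sorted(update_times)
    gaps = [(prev, nxt) for prev, nxt in zip(times, times[1:]) if nxt - prev > limit]
    if now is None:
        now = datetime.now()
    is_overdue = now - times[-1] > limit
    return gaps, is_overdue


# Example: an hourly table that skipped two loads overnight.
base = datetime(2024, 1, 1)
loads = [base + timedelta(hours=h) for h in (0, 1, 2, 5)]
gaps, overdue = find_update_gaps(loads, timedelta(hours=1), now=base + timedelta(hours=6))
for prev, nxt in gaps:
    print(f"ALERT: no update between {prev} and {nxt}")
print("overdue:", overdue)
```

Here the gap between 02:00 and 05:00 is flagged, while the table is not overdue at 06:00. In practice the timestamps would come from your pipeline's run log, and the alert would go to a pager or chat channel rather than `print`.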

Strategies to Prevent Stale Data

1. Implement Real-Time Data Pipelines

Use streaming technologies and APIs to ensure data flows continuously between sources and analytics tools.
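True streaming usually relies on a message broker, which is beyond a short example. A simpler pattern that still narrows the freshness gap is watermark-based incremental sync: on a short schedule, pull only the rows changed since the last pull and upsert them into the analytics copy. The sketch below assumes each source row carries an `id` and an `updated_at` timestamp; the in-memory list and dictionary stand in for a real source system and warehouse table.

```python
from datetime import datetime


def pull_changes(source_rows, watermark):
    """Return rows updated after `watermark` (oldest first) and the new watermark.

    In a real pipeline this would be a query along the lines of
    SELECT * FROM source WHERE updated_at > :watermark.
    """
    changed = sorted(
        (row for row in source_rows if row["updated_at"] > watermark),
        key=lambda row: row["updated_at"],
    )
    new_watermark = changed[-1]["updated_at"] if changed else watermark
    return changed, new_watermark


def apply_changes(target, changed):
    """Upsert changed rows into the target table, keyed by `id`."""
    for row in changed:
        target[row["id"]] = row


# First sync: both rows are new.
source = [
    {"id": 1, "stock": 40, "updated_at": datetime(2024, 1, 1, 9, 0)},
    {"id": 2, "stock": 0, "updated_at": datetime(2024, 1, 1, 9, 5)},
]
target = {}
changed, watermark = pull_changes(source, datetime.min)
apply_changes(target, changed)

# Stock for item 1 changes at the source; the next sync picks up only that row.
source[0] = {"id": 1, "stock": 35, "updated_at": datetime(2024, 1, 1, 9, 10)}
changed, watermark = pull_changes(source, watermark)
apply_changes(target, changed)
print(len(changed), target[1]["stock"], watermark)
```

One design caveat: the strict `>` comparison means a row written later with a timestamp equal to the current watermark would be skipped, so production pipelines often re-read a small overlap window or read from the source's change log instead.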

2. Schedule Regular Data Audits

Set up quarterly audits to verify data accuracy and identify outdated datasets across systems.
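An audit starts with an inventory. As a minimal sketch, assuming you can export each dataset's last refresh time and an agreed maximum age, the function below ranks datasets from oldest to newest and marks the ones that exceed their limit. The dataset names, dates, and limits are made up for illustration.

```python
from datetime import datetime, timedelta


def audit_datasets(last_updated, max_age, now, default_max_age=timedelta(days=1)):
    """Return (name, age, is_stale) tuples sorted oldest first.

    last_updated: dataset name -> time of last successful refresh.
    max_age: dataset name -> allowed age; unlisted datasets use default_max_age.
    """
    report = []
    for name, updated in last_updated.items():
        age = now - updated
        limit = max_age.get(name, default_max_age)
        report.append((name, age, age > limit))
    report.sort(key=lambda item: item[1], reverse=True)
    return report


inventory = {
    "orders": datetime(2024, 3, 31, 12, 0),
    "pricing": datetime(2024, 3, 28, 0, 0),
    "crm_contacts": datetime(2024, 1, 15, 0, 0),
}
limits = {"crm_contacts": timedelta(days=30)}  # CRM data may be a month old

for name, age, stale in audit_datasets(inventory, limits, now=datetime(2024, 4, 1)):
    status = "STALE" if stale else "ok"
    print(f"{name:<14} {age.days:>3} days old  {status}")
```

Sorting by age puts the datasets most in need of attention at the top of the audit report.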

3. Automate Data Validation

Leverage automated scripts or AI-powered tools that detect inconsistencies and clean data before it’s analyzed.
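Automated validation can begin with a handful of explicit rules before any AI model is involved. The sketch below assumes hypothetical product rows with `sku`, `price`, and `updated_at` fields. It rejects rows that are missing required values, carry negative prices, have timestamps in the future, or are older than an allowed age, and it records the reason for each rejection so someone can fix the source.

```python
from datetime import datetime, timedelta

REQUIRED_FIELDS = ("sku", "price", "updated_at")  # hypothetical schema


def validate_rows(rows, now, max_age):
    """Split rows into (valid, rejected); rejected pairs each row with its problems."""
    valid, rejected = [], []
    for row in rows:
        problems = [f"missing {field}" for field in REQUIRED_FIELDS if row.get(field) is None]
        price = row.get("price")
        if price is not None and price < 0:
            problems.append("negative price")
        updated = row.get("updated_at")
        if updated is not None:
            if updated > now:
                problems.append("timestamp in the future")
            elif now - updated > max_age:
                problems.append("stale record")
        if problems:
            rejected.append((row, problems))
        else:
            valid.append(row)
    return valid, rejected


now = datetime(2024, 4, 1, 12, 0)
rows = [
    {"sku": "A1", "price": 19.99, "updated_at": now - timedelta(hours=1)},
    {"sku": None, "price": 5.00, "updated_at": now - timedelta(hours=2)},
    {"sku": "C3", "price": -2.00, "updated_at": now + timedelta(days=1)},
    {"sku": "D4", "price": 7.50, "updated_at": now - timedelta(days=10)},
]
valid, rejected = validate_rows(rows, now, max_age=timedelta(days=7))
for row, problems in rejected:
    print(row["sku"], problems)
```

Keeping rejected rows with their reasons, rather than silently dropping them, turns validation into a feedback loop for the systems that produce the data.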

4. Invest in Cloud Integration

Cloud platforms such as AWS, Google Cloud, or Azure make it easier to keep systems synchronized in real time and to scale as data volumes grow.

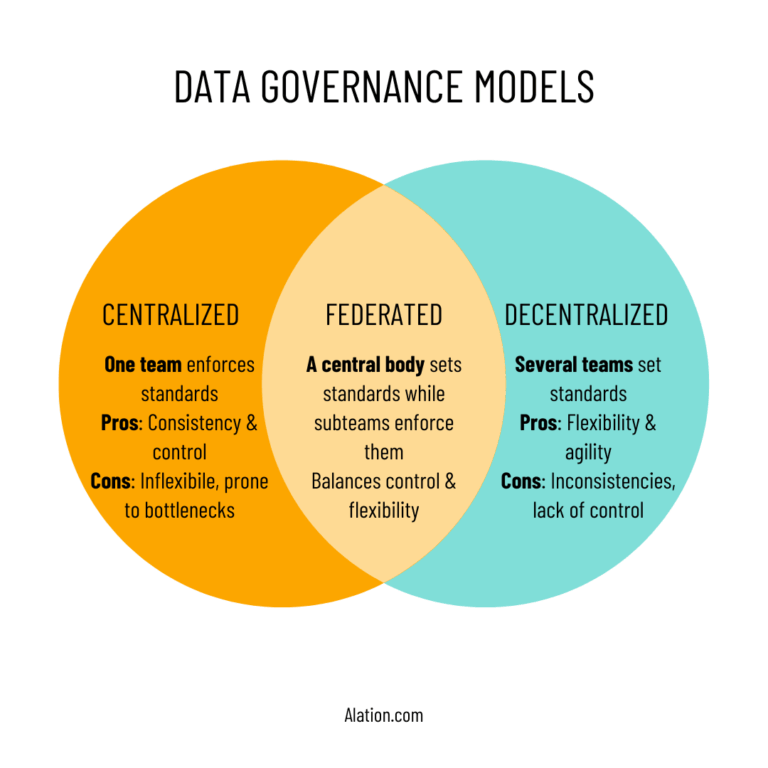

5. Build a Data Governance Framework

Define clear roles, responsibilities, and protocols for data handling to ensure continuous freshness and reliability.
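One way to make roles and protocols concrete is a registry that records, for every dataset, an accountable owner, a freshness agreement, and a refresh method, so that staleness alerts reach someone who can act on them. The `DatasetPolicy` fields, the team names, and the fallback team in this sketch are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class DatasetPolicy:
    owner: str          # team accountable for keeping the dataset fresh
    max_age: timedelta  # freshness agreement: oldest acceptable data
    refresh: str        # documented refresh protocol


FALLBACK_OWNER = "data-governance"  # hypothetical catch-all team


def route_stale_alerts(stale_datasets, registry):
    """Group stale dataset names by the owner responsible for fixing them.

    Datasets with no registered policy go to FALLBACK_OWNER, which also
    exposes gaps in the governance framework itself.
    """
    alerts = defaultdict(list)
    for name in stale_datasets:
        policy = registry.get(name)
        owner = policy.owner if policy is not None else FALLBACK_OWNER
        alerts[owner].append(name)
    return dict(alerts)


registry = {
    "orders": DatasetPolicy("sales-engineering", timedelta(hours=1), "streaming"),
    "crm_contacts": DatasetPolicy("marketing-ops", timedelta(days=30), "nightly batch"),
}
print(route_stale_alerts(["orders", "crm_contacts", "legacy_inventory"], registry))
```

The same `max_age` field could feed whatever freshness check you run, so the governance record and the monitoring stay in agreement.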

The Business Advantage of Fresh Data

Companies that prioritize data freshness see improved responsiveness, stronger forecasting accuracy, and higher customer retention. Fresh data enables machine learning models to stay relevant, dashboards to reflect reality, and executives to make decisions grounded in truth.

To stay ahead, organizations must build a culture of continuous data improvement, in which systems, people, and policies work together to maintain data quality at all times.

Frequently Asked Questions

1. How can I tell if my analytics platform is using stale data?

Check how often your datasets refresh. If they are not updated in real time, or at least daily, you may already be working with outdated information.
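To put a number on that check, assuming you can list the timestamps at which a dataset was refreshed, the sketch below takes the median gap between refreshes and buckets it using the hourly and daily thresholds mentioned in the answer. The function name and the category labels are illustrative.

```python
from datetime import datetime, timedelta
from statistics import median


def classify_refresh_cadence(update_times):
    """Bucket a dataset by the median gap between its refreshes."""
    times = sorted(update_times)
    if len(times) < 2:
        raise ValueError("need at least two refresh timestamps")
    gaps = [(later - earlier).total_seconds() for earlier, later in zip(times, times[1:])]
    typical = timedelta(seconds=median(gaps))
    if typical <= timedelta(hours=1):
        label = "hourly or faster"
    elif typical <= timedelta(days=1):
        label = "at least daily"
    else:
        label = "less than daily: likely stale for fast-moving decisions"
    return typical, label


daily = [datetime(2024, 4, d, 2, 0) for d in range(1, 6)]
weekly = [datetime(2024, 4, d) for d in (1, 8, 15, 22)]
print(classify_refresh_cadence(daily))
print(classify_refresh_cadence(weekly))
```

Using the median rather than the mean keeps one unusually long outage from hiding an otherwise regular schedule; the outage itself is better caught by gap alerting.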

2. What tools help prevent stale data?

Use ETL automation tools, API integrations, and real-time dashboards that connect directly to live data sources.

3. How often should we review data quality?

Perform quarterly data validation and annual system audits to ensure consistency and freshness.

Conclusion

Stale data doesn’t just weaken analytics; it silently undermines growth. Executives who invest in modern data systems not only improve performance but also gain a critical edge in strategy and innovation. The path to reliable insights begins with one decision: keep your data fresh.

Call to Action: Don’t let outdated information slow your business down. Visit our Data Quality & Validation Solutions page to learn how we can help you maintain accurate, up-to-date analytics for every decision.