Introduction

Data-driven organizations depend on accurate, reliable information to make decisions at speed. Yet as data volumes grow and pipelines become more complex, errors and anomalies are no longer easy to spot with manual checks or static rules. Inconsistent values, missing records, unexpected spikes, and silent data drift can undermine analytics, AI models, and executive confidence.

AI for data validation has emerged as a powerful response to this challenge. By learning normal data patterns and detecting deviations in near real time, AI-driven systems help enterprises identify issues early, reduce manual effort, and protect decision-making at scale. For global organizations operating complex data ecosystems, anomaly detection is becoming a core capability rather than a nice-to-have.

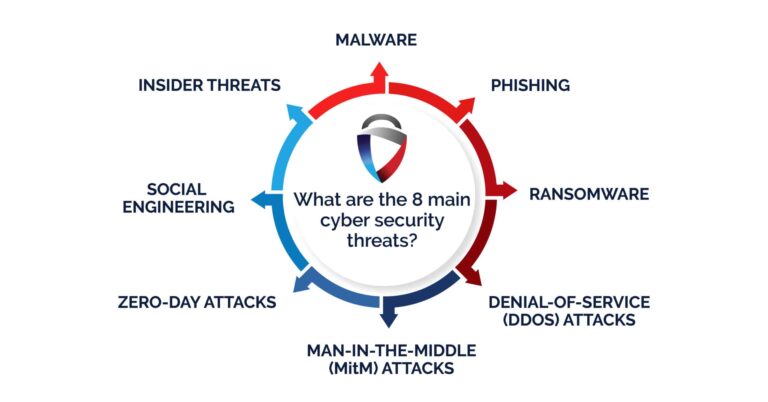

What Are Data Anomalies

Data anomalies are values or patterns that deviate from expected behavior. These deviations may indicate errors, system failures, integration issues, or even malicious activity. In modern analytics environments, anomalies are often subtle and distributed across multiple systems.

Common types of data anomalies include:

- Sudden spikes or drops in key metrics

- Missing or incomplete records

- Duplicate or inconsistent values

- Unexpected changes in data distributions

- Gradual data drift over time

Left undetected, these issues propagate through dashboards, reports, and AI models, leading to inaccurate insights and poor decisions.
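
To make the last two anomaly types more concrete, the sketch below scores distribution drift with the population stability index (PSI). PSI is one common choice among several, not a method prescribed here, and the function name, the ten-bucket default, and the sample data are illustrative assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one numeric column; larger values mean more drift."""
    if not baseline or not current:
        raise ValueError("both samples must contain at least one value")

    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge buckets.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bucket is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical data: last month's values versus a shifted batch.
baseline = [float(v) for v in range(100)]
shifted = [v + 50 for v in baseline]
print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, shifted))
```

A commonly cited rule of thumb treats a PSI below roughly 0.1 as stable and above roughly 0.25 as a significant shift, but those cut-offs are conventions to tune per dataset, not guarantees.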

Why Traditional Data Validation Falls Short

Rule-based validation has long been the foundation of data quality management. While effective for simple checks, it struggles in modern environments. Static rules cannot easily adapt to changing data patterns, new sources, or evolving business behavior.

Key limitations of traditional approaches include:

- High maintenance effort as rules must be updated manually

- Limited ability to detect unknown or emerging issues

- Delayed detection in batch-oriented systems

- Poor scalability across large and complex datasets

As enterprises move toward real-time analytics and AI-driven decision-making, these limitations become more costly and more visible.

How AI for Data Validation Works

AI-based data validation uses machine learning models to learn what normal data behavior looks like across time, sources, and dimensions. Instead of relying solely on predefined rules, these systems adapt continuously as data evolves.

Core techniques include:

- Statistical learning to model normal ranges and distributions

- Unsupervised learning to identify unusual patterns

- Time series analysis to detect sudden or gradual changes

- Clustering to spot outliers across complex datasets

Once trained, AI models monitor data streams and flag anomalies in near real time, allowing teams to act before issues escalate.
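
As a minimal illustration of the statistical and time series ideas above, the sketch below flags spikes by comparing each new value with a rolling median and median absolute deviation (a modified z-score). This is a deliberately simple stand-in for a trained model. The function name, window size, threshold, and sample data are assumptions chosen for the example.

```python
from statistics import median
from typing import List, Sequence


def flag_spikes(
    values: Sequence[float], window: int = 7, threshold: float = 3.5
) -> List[int]:
    """Return indexes whose value deviates strongly from the preceding window."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        center = median(history)
        # Median absolute deviation: a spread estimate that resists outliers.
        mad = median(abs(v - center) for v in history)
        if mad == 0:
            # Perfectly flat history: treat any change as a deviation.
            if values[i] != center:
                flagged.append(i)
            continue
        # 0.6745 rescales the MAD so the score is comparable to a z-score.
        score = 0.6745 * abs(values[i] - center) / mad
        if score > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily order counts with one obvious spike at index 8.
daily_orders = [100, 102, 98, 101, 99, 103, 100, 97, 250, 101]
print(flag_spikes(daily_orders))  # → [8]
```

Because the baseline is recomputed from the most recent window, the notion of "normal" moves with the data, which is the adaptive behavior that static rules lack. A production system would typically add seasonality handling and richer models.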

Key Benefits of AI-Driven Anomaly Detection

AI for data validation delivers value across both technical and business dimensions.

Early Issue Detection

By identifying anomalies as they occur, AI systems can stop many errors before they reach dashboards, reports, or downstream applications. This reduces rework and protects trust in analytics.

Reduced Manual Effort

Automation reduces the need for constant manual checks and rule updates. Data teams can focus on analysis and improvement rather than firefighting.

Improved Analytics and AI Accuracy

Reliable data improves the performance of predictive models, forecasting systems, and decision support tools. AI-driven validation helps ensure that models learn from clean and consistent inputs.

Scalability Across Data Ecosystems

AI systems can scale across thousands of tables, streams, and metrics, making them well suited for large enterprises and multi-cloud environments.

Organizations often combine AI-driven validation with broader data quality and governance initiatives, such as those supported through https://dataguruanalytics.org/data-quality-validation-solutions.

Use Cases for AI-Based Data Anomaly Detection

AI-powered anomaly detection is already in use across industries.

Common enterprise use cases include:

- Monitoring financial transactions for unusual patterns

- Detecting data pipeline failures in real time

- Identifying inconsistencies in customer and operational data

- Validating sensor and IoT data streams

- Protecting training data used in machine learning models

In each case, speed and accuracy are critical. AI enables both at scale.

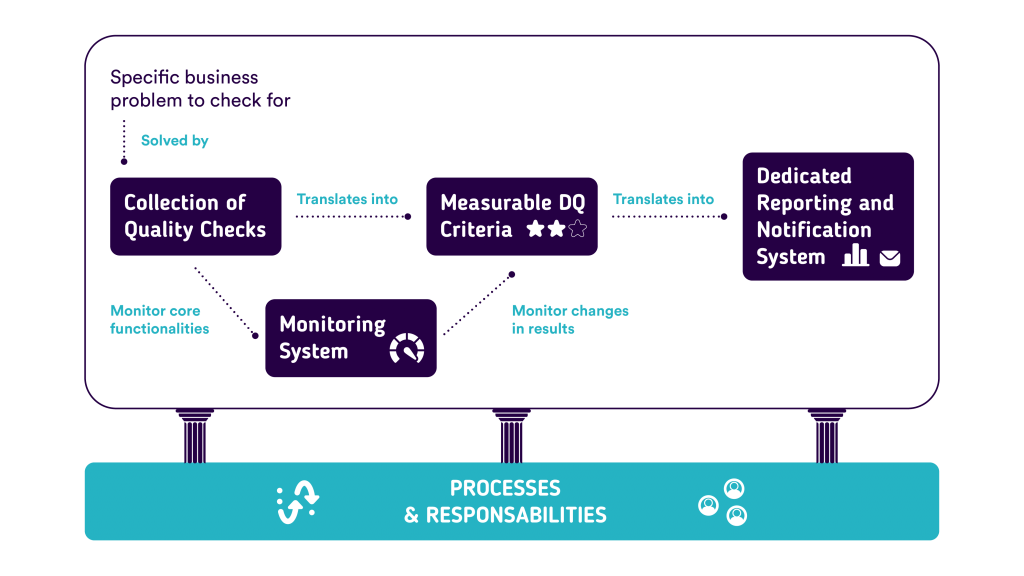

Integrating AI Validation into Data Pipelines

To be effective, AI-driven validation must be embedded directly into data pipelines rather than treated as a downstream check. This allows anomalies to be detected before data is consumed by analytics tools or applications.

Best practices include:

- Integrating validation at ingestion and transformation stages

- Combining AI models with basic rule checks for critical thresholds

- Logging anomalies and alerting on them with clear severity levels

- Linking alerts to remediation workflows

This integrated approach reduces latency between detection and resolution.
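
The practices above can be sketched as a single validation step that a pipeline stage calls before passing data on. The names used here (`Severity`, `Alert`, `validate_metric`), the hard minimum, and the z-score threshold are hypothetical and chosen only for illustration. The learned check is a plain mean and standard deviation comparison standing in for whatever model a team actually uses.

```python
from dataclasses import dataclass
from enum import Enum
from statistics import mean, stdev
from typing import List, Optional, Sequence


class Severity(Enum):
    WARNING = "warning"
    CRITICAL = "critical"


@dataclass
class Alert:
    metric: str
    value: Optional[float]
    severity: Severity
    reason: str


def validate_metric(
    metric: str,
    value: Optional[float],
    history: Sequence[float],
    hard_min: float = 0.0,
    z_threshold: float = 3.0,
) -> List[Alert]:
    """Run rule checks first, then a history-based check, and return alerts."""
    # Rule checks: critical thresholds that should not depend on a model.
    if value is None:
        return [Alert(metric, value, Severity.CRITICAL, "missing value")]
    alerts = []
    if value < hard_min:
        alerts.append(
            Alert(metric, value, Severity.CRITICAL, f"below hard minimum {hard_min}")
        )
    # History-based check: how unusual is this value versus recent runs?
    if len(history) >= 2:
        spread = stdev(history)
        if spread > 0 and abs(value - mean(history)) / spread > z_threshold:
            alerts.append(
                Alert(metric, value, Severity.WARNING, "unusual versus recent history")
            )
    return alerts


# Hypothetical row counts from previous loads of the same table.
recent_row_counts = [980, 1010, 995, 1005]
for alert in validate_metric("row_count", -5, recent_row_counts):
    print(alert.severity.value, alert.metric, alert.reason)
```

Returning structured alerts rather than raising immediately lets the calling stage decide what to do with each severity, for example halting the load on a critical alert and routing warnings to a remediation queue.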

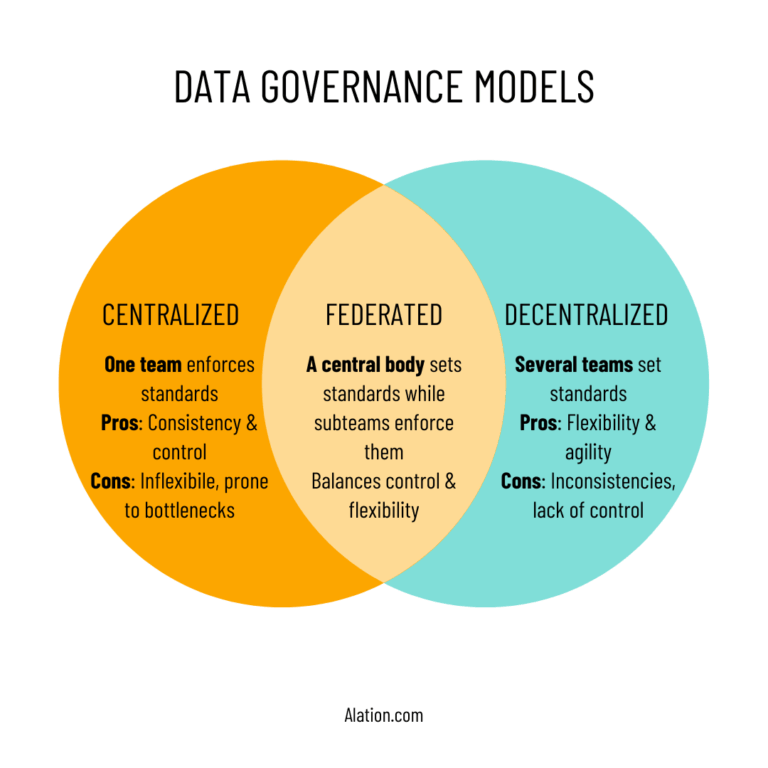

Governance and Trust in AI-Driven Validation

Automation does not remove the need for governance. In fact, AI-based validation requires strong oversight to ensure transparency, accountability, and alignment with business goals.

Key governance considerations include:

- Clear ownership of data quality outcomes

- Explainability of anomaly detection results

- Defined escalation and response procedures

- Continuous monitoring of model performance

Enterprises often align AI validation initiatives with centralized governance frameworks and advisory support, such as https://dataguruanalytics.org/services/research-consultancy/, to ensure long-term sustainability.

Common Challenges and How to Address Them

While powerful, AI-driven anomaly detection is not without challenges.

Common issues include:

- False positives during early model training

- Limited insight into why an anomaly was flagged

- Resistance from teams unfamiliar with AI systems

- Inconsistent data definitions across sources

These challenges can be addressed through phased implementation, stakeholder education, and continuous tuning of models. Combining AI with human oversight helps balance automation and control.
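
One way to ground the tuning step is to have reviewers label a sample of records and measure how well the alerts match their judgment. The sketch below is a minimal version of that idea. The function name and the review data are hypothetical, and it assumes each reviewed record carries two flags: whether the system alerted and whether a human confirmed a real issue.

```python
from typing import Dict, Iterable, Tuple


def alert_quality(reviews: Iterable[Tuple[bool, bool]]) -> Dict[str, float]:
    """Summarize (was_flagged, was_real_issue) pairs from human review."""
    true_pos = false_pos = false_neg = 0
    for was_flagged, was_real_issue in reviews:
        if was_flagged and was_real_issue:
            true_pos += 1
        elif was_flagged:
            false_pos += 1  # alert raised, reviewer found nothing wrong
        elif was_real_issue:
            false_neg += 1  # real issue the system missed
    flagged = true_pos + false_pos
    real = true_pos + false_neg
    return {
        # Share of alerts that were worth a reviewer's time.
        "precision": true_pos / flagged if flagged else 0.0,
        # Share of real issues that the system caught.
        "recall": true_pos / real if real else 0.0,
        "false_positives": float(false_pos),
    }


# Hypothetical review sample: 3 confirmed alerts, 1 false alarm,
# 1 missed issue, and 5 clean records that were correctly left alone.
sample = (
    [(True, True)] * 3 + [(True, False)] + [(False, True)] + [(False, False)] * 5
)
print(alert_quality(sample))
```

Tracking these numbers across tuning rounds shows whether a threshold change is reducing false positives at the cost of missed issues, which keeps the human oversight loop measurable.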

Measuring the Impact of AI for Data Validation

Enterprises should track clear metrics to assess the effectiveness of AI-based validation.

Key indicators include:

- Reduction in data quality incidents

- Faster detection and resolution times

- Improved accuracy of analytics and reports

- Increased confidence in AI and predictive models

- Lower operational effort spent on data cleaning

These outcomes demonstrate whether automation is delivering tangible business value.
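
Two of these indicators, incident volume and detection and resolution times, are straightforward to compute from an incident log. The sketch below assumes a hypothetical `Incident` record with three timestamps. The field names and sample values are illustrative and not tied to any particular incident tracker.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Sequence


@dataclass
class Incident:
    occurred_at: datetime   # when the bad data entered the pipeline
    detected_at: datetime   # when an alert or a person noticed it
    resolved_at: datetime   # when the fix was confirmed


def mean_delta(deltas: List[timedelta]) -> timedelta:
    return sum(deltas, timedelta()) / len(deltas)


def summarize_incidents(incidents: Sequence[Incident]) -> Dict[str, object]:
    """Return the incident count plus mean time to detect and to resolve."""
    if not incidents:
        return {
            "incident_count": 0,
            "mean_time_to_detect": None,
            "mean_time_to_resolve": None,
        }
    return {
        "incident_count": len(incidents),
        "mean_time_to_detect": mean_delta(
            [i.detected_at - i.occurred_at for i in incidents]
        ),
        # Resolution time is measured from detection, not from occurrence.
        "mean_time_to_resolve": mean_delta(
            [i.resolved_at - i.detected_at for i in incidents]
        ),
    }


# Hypothetical incidents for one reporting period.
start = datetime(2024, 1, 1, 9, 0)
log = [
    Incident(start, start + timedelta(minutes=10), start + timedelta(minutes=70)),
    Incident(start, start + timedelta(minutes=30), start + timedelta(minutes=150)),
]
print(summarize_incidents(log))
```

Comparing the same summary for periods before and after rollout gives a simple, auditable view of whether detection is actually getting faster.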

Frequently Asked Questions

Can AI detect all types of data anomalies?

Not on its own. AI is highly effective at detecting patterns and deviations, but it works best when combined with basic rules and domain expertise.

Is AI for data validation suitable for regulated industries?

Yes. When governed properly, AI-driven validation can improve auditability and consistency in regulated environments.

How long does it take to implement AI-based anomaly detection?

Initial implementations can deliver value within a few months, with accuracy improving as models learn over time.

Conclusion

As data ecosystems grow in scale and complexity, manual validation approaches are no longer sufficient. AI for data validation enables enterprises to detect anomalies early, protect analytics integrity, and automate quality management at scale. Organizations that invest in AI-driven anomaly detection build a stronger foundation for analytics, AI, and data-driven strategy.

Call to Action:

Strengthen trust in your data with intelligent automation. Explore how AI-driven data validation can improve accuracy and resilience at https://dataguruanalytics.org and move toward analytics systems designed for scale and confidence.